In What Category Camera Would Be Fit In Accounting Language

Did you know that there's one mistake…

…that thousands of data science beginners unknowingly commit?

And that this error can single-handedly ruin your machine learning model?

No, that's not an exaggeration. We're talking about one of the trickiest obstacles in applied machine learning: overfitting.

But don't worry:

In this guide, we'll walk you through exactly what overfitting means, how to spot it in your models, and what to do if your model is overfit.

By the end, you'll know how to deal with this tricky problem once and for all.

Table of Contents

- Examples of Overfitting

- Signal vs. Noise

- Goodness of Fit

- Overfitting vs. Underfitting

- How to Detect Overfitting

- How to Prevent Overfitting

- Additional Resources

Examples of Overfitting

Let's say we want to predict if a student will land a job interview based on her resume.

Now, assume we train a model from a dataset of 10,000 resumes and their outcomes.

Next, we try the model out on the original dataset, and it predicts outcomes with 99% accuracy… wow!

But now comes the bad news.

When we run the model on a new ("unseen") dataset of resumes, we only get 50% accuracy… uh-oh!

Our model doesn't generalize well from our training data to unseen data.

This is known as overfitting, and it's a common problem in machine learning and data science.

In fact, overfitting occurs in the real world all the time. You only need to turn on the news channel to hear examples:

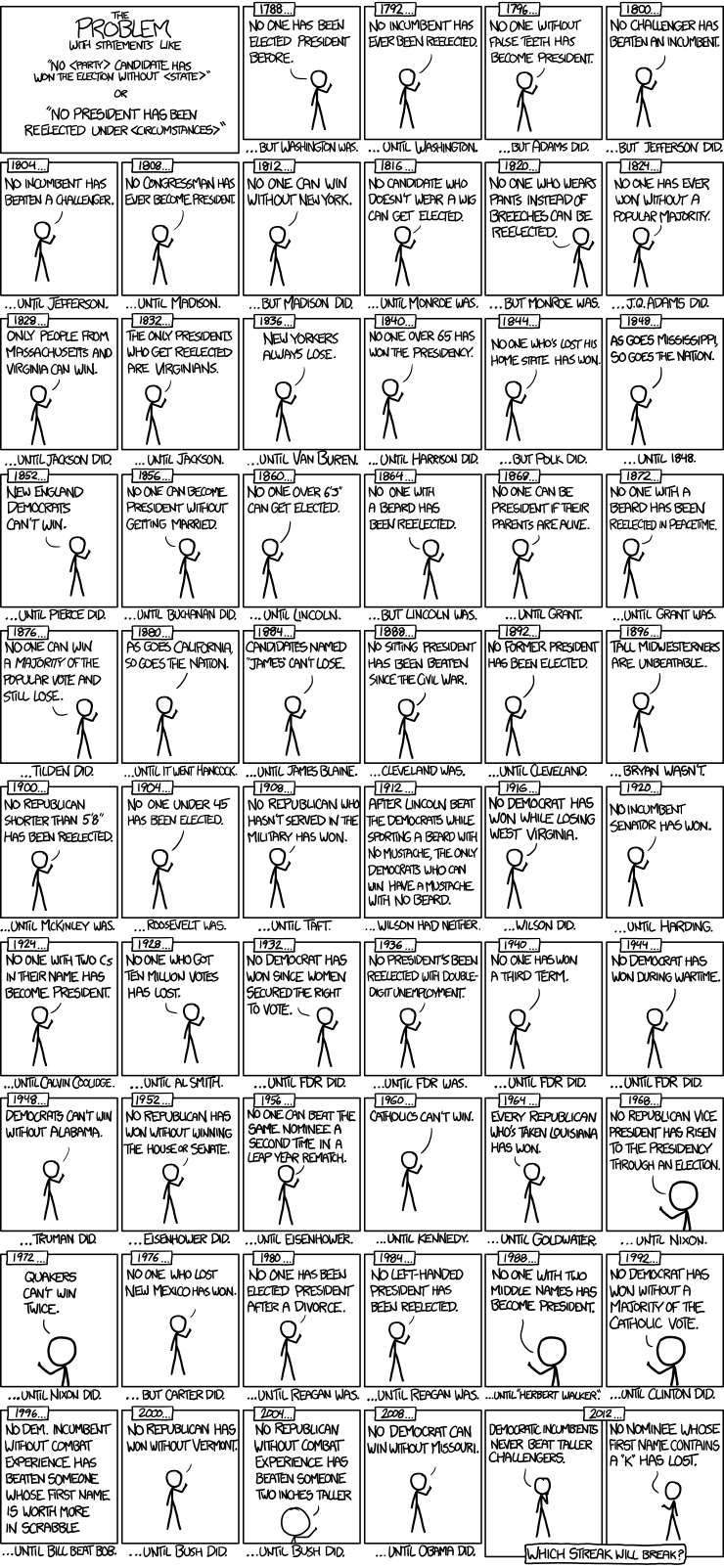

Overfitting Electoral Precedence (source: XKCD)

Signal vs. Noise

You may have heard of the famous book The Signal and the Noise by Nate Silver.

In predictive modeling, you can think of the "signal" as the true underlying pattern that you wish to learn from the data.

"Noise," on the other hand, refers to the irrelevant information or randomness in a dataset.

For example, let's say you're modeling height vs. age in children. If you sample a large portion of the population, you'd find a pretty clear relationship:

Height vs. Age (source: CDC)

This is the signal.

However, if you could only sample one local school, the relationship might be muddier. It would be affected by outliers (e.g. a kid whose dad is an NBA player) and randomness (e.g. kids who hit puberty at different ages).

Noise interferes with signal.

Here's where machine learning comes in. A well-functioning ML algorithm will separate the signal from the noise.

If the algorithm is too complex or flexible (e.g. it has too many input features or it's not properly regularized), it can end up "memorizing the noise" instead of finding the signal.

This overfit model will then make predictions based on that noise. It will perform unusually well on its training data… yet very poorly on new, unseen data.

Goodness of Fit

In statistics, goodness of fit refers to how closely a model's predicted values match the observed (true) values.

A model that has learned the noise instead of the signal is considered "overfit" because it fits the training dataset but has poor fit with new datasets.

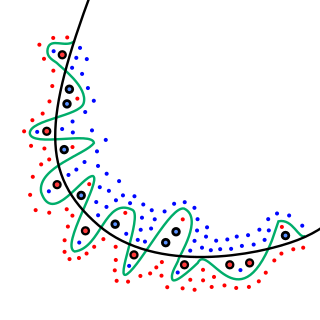

While the black line fits the data well, the green line is overfit.
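For regression problems, one common way to put a number on goodness of fit is the coefficient of determination, R². The plain-Python sketch below uses a helper name of our own choosing and computes R² as 1 minus the ratio of squared prediction errors to squared deviations from the mean. In practice, scikit-learn's `sklearn.metrics.r2_score` does the same job. Keep in mind that a high R² on the training data alone can't tell you whether the model is overfit. You need to measure it on data the model hasn't seen.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # unexplained error
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)             # total variation
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all observed values are equal")
    return 1.0 - ss_res / ss_tot


observed = [2.0, 4.0, 6.0, 8.0]
print(r_squared(observed, observed))    # 1.0: a perfect fit
print(r_squared(observed, [5.0] * 4))   # 0.0: no better than predicting the mean
```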

Overfitting vs. Underfitting

We can understand overfitting better by looking at the opposite problem, underfitting.

Underfitting occurs when a model is too simple (informed by too few features or regularized too much), which makes it inflexible in learning from the dataset.

Simple learners tend to have less variance in their predictions but more bias towards wrong outcomes (see: The Bias-Variance Tradeoff).

On the other hand, complex learners tend to have more variance in their predictions.

Both bias and variance are forms of prediction error in machine learning.

Typically, we can reduce error from bias but might increase error from variance as a result, or vice versa.

This trade-off between too simple (high bias) vs. too complex (high variance) is a key concept in statistics and machine learning, and one that affects all supervised learning algorithms.

Bias vs. Variance (source: EDS)
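To see the trade-off in action, here's a small plain-Python experiment using k-nearest-neighbors regression, where k controls flexibility. With k=1 the model can memorize every training point, noise included. With k equal to the whole training set, it just predicts the overall average. The toy data (a straight-line signal plus Gaussian noise), the seed, and the specific k values are arbitrary choices for illustration. You should see k=1 score a perfect training error but a worse test error than a moderate k, while the all-points model does poorly on both.

```python
import random


def make_data(n, rng):
    """Toy data: signal y = 2x plus Gaussian noise with standard deviation 1."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + rng.gauss(0, 1) for x in xs]
    return xs, ys


def knn_predict(train_x, train_y, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def knn_mse(train_x, train_y, eval_x, eval_y, k):
    """Mean squared error of a k-NN model (fit on train_*) evaluated on eval_*."""
    preds = [knn_predict(train_x, train_y, x, k) for x in eval_x]
    return sum((p - y) ** 2 for p, y in zip(preds, eval_y)) / len(eval_y)


rng = random.Random(0)
train_x, train_y = make_data(500, rng)
test_x, test_y = make_data(500, rng)

results = {}
for k in (1, 15, 500):  # very flexible -> moderate -> very rigid
    train_err = knn_mse(train_x, train_y, train_x, train_y, k)
    test_err = knn_mse(train_x, train_y, test_x, test_y, k)
    results[k] = (train_err, test_err)
    print(f"k={k:>3}  train MSE={train_err:6.2f}  test MSE={test_err:6.2f}")
```

The k=1 row is overfitting in miniature: zero training error, because each point is its own nearest neighbor, but noticeably higher test error. The k=500 row is underfitting.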

How to Detect Overfitting

A primal challenge with overfitting, and with machine learning in general, is that we can't know how well our model will perform on new data until we actually test it.

To address this, we can split our initial dataset into separate training and test subsets.

Train-Test Split

This method can approximate how well our model will perform on new data.

If our model does much better on the training set than on the test set, then we're likely overfitting.

For example, it would be a big red flag if our model saw 99% accuracy on the training set but only 55% accuracy on the test set.
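Here's a minimal sketch of that check in plain Python. `split_rows` and `looks_overfit` are hypothetical helpers (scikit-learn's `sklearn.model_selection.train_test_split` handles the splitting in real projects). The 80/20 split and the 0.10 maximum acceptable gap are arbitrary assumptions, not a universal rule.

```python
import random


def split_rows(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of rows, then hold out test_fraction of them as the test set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def looks_overfit(train_score, test_score, max_gap=0.10):
    """Flag a model whose training score beats its test score by more than max_gap."""
    return (train_score - test_score) > max_gap


train, test = split_rows(range(10_000))
print(len(train), len(test))        # 8000 2000
print(looks_overfit(0.99, 0.55))    # True: the red flag described above
print(looks_overfit(0.91, 0.89))    # False: a small, expected gap
```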

If you'd like to see how this works in Python, we have a full tutorial for machine learning using Scikit-Learn.

Another tip is to start with a very simple model to serve as a benchmark.

Then, as you try more complex algorithms, you'll have a reference point to see if the additional complexity is worth it.

This is the Occam's razor test. If two models have comparable performance, then you should usually pick the simpler one.
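As a sketch of both ideas, the code below scores a "predict the most common label" baseline and then applies an Occam's-razor rule. Among models whose test scores fall within a tolerance of the best, it picks the one with the lowest complexity rank. The labels, scores, complexity ranks, and the 0.01 tolerance are all made up for illustration.

```python
from collections import Counter


def majority_baseline_accuracy(train_labels, test_labels):
    """Accuracy of always predicting the most common label seen in training."""
    majority = Counter(train_labels).most_common(1)[0][0]
    return sum(label == majority for label in test_labels) / len(test_labels)


def pick_model(candidates, tolerance=0.01):
    """Occam's razor: among models within `tolerance` of the best score, pick the simplest.

    candidates: (name, complexity_rank, test_score) tuples; lower rank = simpler.
    """
    best = max(score for _, _, score in candidates)
    comparable = [c for c in candidates if best - c[2] <= tolerance]
    return min(comparable, key=lambda c: c[1])[0]


# Hypothetical labels ("did the student land an interview?") and scores.
train_labels = ["no"] * 70 + ["yes"] * 30
test_labels = ["no"] * 65 + ["yes"] * 35
baseline = majority_baseline_accuracy(train_labels, test_labels)
print(baseline)  # 0.65

candidates = [
    ("majority baseline", 0, baseline),
    ("logistic regression", 1, 0.810),
    ("deep neural network", 3, 0.815),
]
print(pick_model(candidates))  # logistic regression
```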

How to Prevent Overfitting

Detecting overfitting is useful, but it doesn't solve the problem. Fortunately, you have several options to try.

Here are a few of the most popular solutions for overfitting:

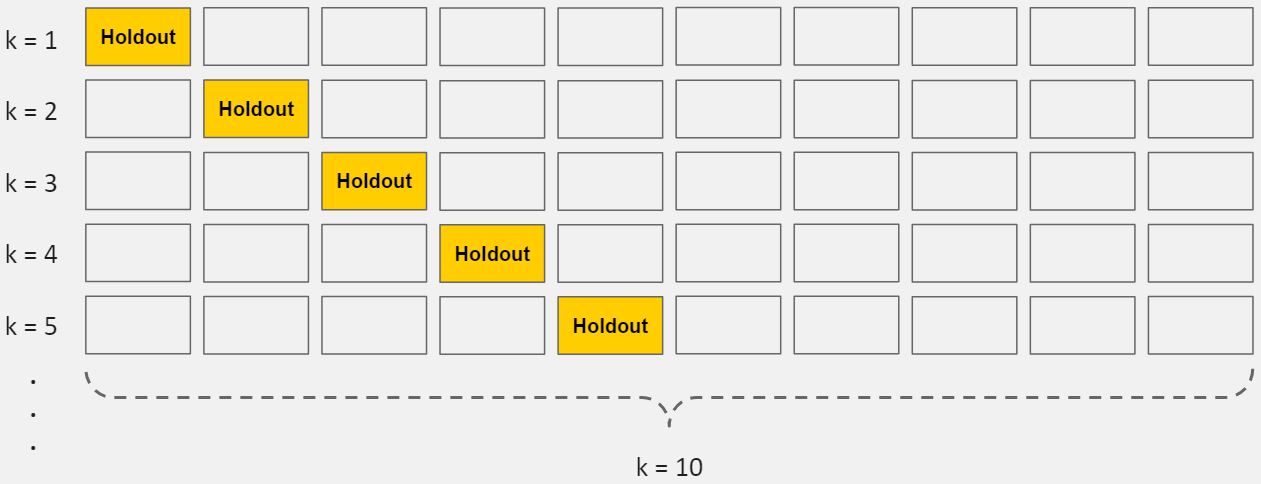

Cross-validation

Cross-validation is a powerful preventative measure against overfitting.

The idea is clever: Use your initial training data to generate multiple mini train-test splits. Use these splits to tune your model.

In standard k-fold cross-validation, we partition the data into k subsets, called folds. Then, we iteratively train the algorithm on k-1 folds while using the remaining fold as the test set (called the "holdout fold").

K-Fold Cross-Validation

Cross-validation allows you to tune hyperparameters with only your original training set. This allows you to keep your test set as a truly unseen dataset for selecting your final model.
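The procedure above can be sketched in plain Python as follows. All function names are our own. The folds here are contiguous, so you'd normally shuffle the rows first; scikit-learn's `KFold(shuffle=True)` and `cross_val_score` in `sklearn.model_selection` cover this in practice. The two candidate models (a constant mean and a straight line) and the toy data are assumptions chosen to keep the example small.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, holdout_idx) for k contiguous folds of nearly equal size."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        holdout = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, holdout
        start += size


def cross_validate(xs, ys, fit, score, k=5):
    """Average the holdout score over k folds. fit(xs, ys) must return a predict(x) function."""
    scores = []
    for train_idx, hold_idx in k_fold_indices(len(xs), k):
        predict = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        preds = [predict(xs[i]) for i in hold_idx]
        scores.append(score([ys[i] for i in hold_idx], preds))
    return sum(scores) / k


def fit_mean(xs, ys):
    """Very simple model: always predict the training mean."""
    m = sum(ys) / len(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Least-squares straight line."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return lambda x: my + slope * (x - mx)


def neg_mse(y_true, y_pred):
    """Negative mean squared error, so that higher is better."""
    return -sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


xs = list(range(20))
ys = [3 * x + (1 if x % 2 else -1) for x in xs]  # linear signal plus alternating "noise"
for name, fit in [("mean", fit_mean), ("line", fit_line)]:
    print(name, round(cross_validate(xs, ys, fit, neg_mse), 2))
```

Every row lands in exactly one holdout fold, so each model is scored only on rows it never trained on. The test set stays untouched for the final check.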

We have another article with a more detailed breakdown of cross-validation.

Train with more data

It won't work every time, but training with more data can help algorithms detect the signal better. In the earlier example of modeling height vs. age in children, it's clear how sampling more schools will help your model.

Of course, that's not always the case. If we just add more noisy data, this technique won't help. That's why you should always ensure your data is clean and relevant.
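Here's a rough simulation of the height-vs-age idea. We repeatedly "run the study" with 10, 100, or 1,000 children, fit a straight line each time, and measure how much the estimated slope wobbles. The numbers (height = 80 + 6 × age + noise) are made up, not real CDC figures. The model is a simple least-squares line. With more data, the estimated slope should cluster more tightly around the true value of 6. This only helps against random noise like the kind simulated here.

```python
import random
import statistics


def sample_children(n, rng):
    """Made-up data: height (cm) = 80 + 6 * age (years) + noise. Not real CDC figures."""
    ages = [rng.uniform(2, 12) for _ in range(n)]
    heights = [80 + 6 * a + rng.gauss(0, 8) for a in ages]
    return ages, heights


def fitted_slope(xs, ys):
    """Least-squares slope of ys on xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    return (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
            / sum((x - mx) ** 2 for x in xs))


rng = random.Random(1)
spread = {}
for n in (10, 100, 1000):
    # Repeat the "study" 200 times to see how much the estimated slope wobbles.
    slopes = [fitted_slope(*sample_children(n, rng)) for _ in range(200)]
    spread[n] = statistics.stdev(slopes)
    print(f"n={n:>4}: mean slope {statistics.fmean(slopes):.2f} (true 6), spread {spread[n]:.3f}")
```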

Remove features

Some algorithms have built-in feature selection.

For those that don't, you can manually improve their generalizability by removing irrelevant input features.

An interesting way to do so is to tell a story about how each feature fits into the model. This is like the data scientist's spin on the software engineer's rubber duck debugging technique, where they debug their code by explaining it, line-by-line, to a rubber duck.

If anything doesn't make sense, or if it's hard to justify certain features, this is a good way to identify them.

In addition, there are several feature selection heuristics you can use for a good starting point.

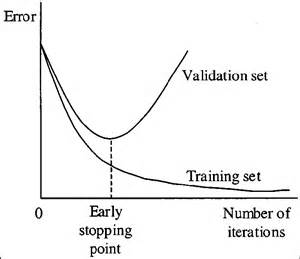
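As one example of such a heuristic (not necessarily one shown in the image above), here's a simple correlation filter. It keeps only the features whose absolute Pearson correlation with the target clears a threshold. The feature names, values, and the 0.1 threshold are hypothetical. Note that correlation only catches linear relationships, so treat this as a first pass rather than a verdict. scikit-learn's `sklearn.feature_selection` module offers ready-made filters such as `SelectKBest`.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def select_features(columns, target, min_abs_corr=0.1):
    """Keep feature names whose |correlation| with the target is at least min_abs_corr."""
    return [name for name, values in columns.items()
            if abs(pearson(values, target)) >= min_abs_corr]


# Hypothetical resume features and outcome scores.
target = [1, 2, 3, 4, 5, 6]
columns = {
    "years_experience": [0, 1, 1, 2, 3, 4],
    "constant_flag": [1, 1, 1, 1, 1, 1],
    "noise": [3, 1, 4, 4, 1, 3],
}
print(select_features(columns, target))  # ['years_experience']
```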

Early stopping

When you're training a learning algorithm iteratively, you can measure how well each iteration of the model performs.

Up until a certain number of iterations, new iterations improve the model. After that point, however, the model's ability to generalize can weaken as it begins to overfit the training data.

Early stopping refers to stopping the training process before the learner passes that point.

Today, this technique is mostly used in deep learning while other techniques (e.g. regularization) are preferred for classical machine learning.
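A common way to implement this is "patience": stop once the validation loss hasn't improved for a set number of epochs, and remember the best epoch seen so far. The class below is a minimal sketch of that rule. The validation curve is made up, and the actual training step is left as a placeholder comment. Deep learning libraries ship their own versions, for example Keras' `keras.callbacks.EarlyStopping` with its `patience` and `restore_best_weights` options.

```python
class EarlyStopping:
    """Stop training after `patience` epochs without validation improvement."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses: improving at first, then rising as the model overfits.
val_curve = [0.90, 0.70, 0.55, 0.48, 0.47, 0.49, 0.52, 0.56, 0.61, 0.67]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_curve):
    # ... one epoch of training would happen here ...
    if stopper.step(epoch, loss):
        break
print(f"stopped at epoch {epoch}, best epoch {stopper.best_epoch} (val loss {stopper.best_loss})")
```

Here training halts at epoch 7, three epochs after the best validation loss at epoch 4. In a real setup you would then restore the weights saved at that best epoch.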

Regularization

Regularization refers to a broad range of techniques for artificially forcing your model to be simpler.

The method will depend on the type of learner you're using. For example, you could prune a decision tree, use dropout on a neural network, or add a penalty parameter to the cost function in regression.

Oftentimes, the regularization method is a hyperparameter as well, which means it can be tuned through cross-validation.

We have a more detailed discussion here on algorithms and regularization methods.
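To make the "penalty parameter" idea concrete, here's a one-feature ridge (L2) regression sketch in plain Python. We add `alpha * w**2` to the squared-error cost. After centering the data so the unpenalized intercept drops out, minimizing the cost gives the closed form `w = Sxy / (Sxx + alpha)`. The data points are made up, and `alpha` is exactly the kind of hyperparameter you'd tune with cross-validation. scikit-learn exposes the same idea as `sklearn.linear_model.Ridge(alpha=...)`.

```python
def ridge_slope(xs, ys, alpha):
    """Slope of a one-feature linear regression with an L2 penalty alpha * w**2.

    Centering removes the (unpenalized) intercept; minimizing
    sum((y_c - w * x_c)**2) + alpha * w**2 gives w = Sxy / (Sxx + alpha).
    """
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / (sxx + alpha)


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.0]
for alpha in (0.0, 10.0, 100.0):
    # Larger alpha -> stronger penalty -> slope shrinks toward zero (a simpler model).
    print(alpha, round(ridge_slope(xs, ys, alpha), 3))
```

With `alpha = 0` this is ordinary least squares. Raising it trades a little bias for less sensitivity to noise in the training points.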

Ensembling

Ensembles are machine learning methods for combining predictions from multiple separate models. There are a few different methods for ensembling, but the two most common are:

Bagging attempts to reduce the chance of overfitting complex models.

- It trains a large number of "strong" learners in parallel.

- A strong learner is a model that's relatively unconstrained.

- Bagging then combines all the strong learners together in order to "smooth out" their predictions.
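Here's a minimal bagging sketch in plain Python. It uses 1-nearest-neighbor regression as the "strong" (unconstrained) learner, trains each copy on a bootstrap resample of the training rows (drawn with replacement), and averages their predictions. The toy data, the 25 models, and the seeds are assumptions for illustration. On noisy data like this, the averaged model should have lower test error than a single 1-NN model.

```python
import random


def fit_1nn(xs, ys):
    """A deliberately unconstrained ("strong") learner: 1-nearest-neighbor regression."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def bagging(xs, ys, fit, n_models=25, seed=0):
    """Fit n_models learners on bootstrap resamples and average their predictions."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # n rows drawn with replacement
        models.append(fit([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(m(x) for m in models) / len(models)


def mse(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy data (assumption): signal y = 2x plus Gaussian noise.
rng = random.Random(42)
train_x = [rng.uniform(0, 10) for _ in range(200)]
train_y = [2 * x + rng.gauss(0, 1) for x in train_x]
test_x = [rng.uniform(0, 10) for _ in range(400)]
test_y = [2 * x + rng.gauss(0, 1) for x in test_x]

single_err = mse(fit_1nn(train_x, train_y), test_x, test_y)
bagged_err = mse(bagging(train_x, train_y, fit_1nn), test_x, test_y)
print(f"single 1-NN test MSE: {single_err:.2f}, bagged: {bagged_err:.2f}")
```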

Boosting attempts to improve the predictive flexibility of simple models.

- It trains a large number of "weak" learners in sequence.

- A weak learner is a constrained model (e.g. you could limit the max depth of each decision tree).

- Each one in the sequence focuses on learning from the mistakes of the one before it.

- Boosting then combines all the weak learners into a single strong learner.
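The sketch below illustrates the "learn from the previous mistakes" idea for regression. Each new decision stump (a one-split tree, our stand-in for a weak learner) is fit to the residuals the ensemble still gets wrong, then added in with a small learning rate. This is a bare-bones version of gradient boosting with squared-error loss, not AdaBoost's reweighting scheme. The curved toy target, 50 rounds, and learning rate of 0.3 are arbitrary choices.

```python
def fit_stump(xs, residuals):
    """Weak learner: a depth-1 regression tree (a single threshold split)."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    best = None
    for cut in range(1, len(order)):
        left = [residuals[i] for i in order[:cut]]
        right = [residuals[i] for i in order[cut:]]
        l_mean, r_mean = sum(left) / len(left), sum(right) / len(right)
        err = (sum((r - l_mean) ** 2 for r in left)
               + sum((r - r_mean) ** 2 for r in right))
        if best is None or err < best[0]:
            threshold = (xs[order[cut - 1]] + xs[order[cut]]) / 2
            best = (err, threshold, l_mean, r_mean)
    _, threshold, l_mean, r_mean = best
    return lambda x: l_mean if x <= threshold else r_mean


def boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Least-squares boosting: each new stump fits what the ensemble still gets wrong."""
    base = sum(ys) / len(ys)
    stumps = []

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)

    for _ in range(n_rounds):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]  # current mistakes
        stumps.append(fit_stump(xs, residuals))
    return predict


def mse(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


xs = [i / 10 for i in range(50)]
ys = [x * x for x in xs]  # a curved target no single stump can capture
one_stump = boost(xs, ys, n_rounds=1, learning_rate=1.0)
ensemble = boost(xs, ys)
print(f"one stump MSE: {mse(one_stump, xs, ys):.3f}, boosted MSE: {mse(ensemble, xs, ys):.3f}")
```

A single stump can only output two values, so it underfits the curve. Stacking many small corrections lets the ensemble approximate it far more closely.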

While bagging and boosting are both ensemble methods, they approach the problem from opposite directions.

Bagging uses complex base models and tries to "smooth out" their predictions, while boosting uses simple base models and tries to "boost" their aggregate complexity.

Next Steps

Whew! We just covered quite a few concepts:

- Signal, noise, and how they relate to overfitting.

- Goodness of fit from statistics

- Underfitting vs. overfitting

- The bias-variance tradeoff

- How to detect overfitting using train-test splits

- How to prevent overfitting using cross-validation, feature selection, regularization, etc.

Hopefully seeing all of these concepts linked together helped clarify some of them.

To truly master this topic, we recommend getting hands-on practice.

While these concepts may feel overwhelming at first, they will 'click into place' once you start seeing them in the context of real-world code and problems.

So here are some additional resources to help you get started:

- Python machine learning tutorial

- Plotting overfitting and underfitting with Scikit-Learn

- More real-world examples of overfitting

Now, go forth and learn! (Or have your code do it for you!)

Source: https://elitedatascience.com/overfitting-in-machine-learning

Posted by: carterhinatimsee.blogspot.com
